Machine Learning data comes in a wide range of formats such as videos, images, sound clips, text and sensor data. For Machine Learning purposes, regardless of the data format, all data can be considered as arrays of numbers. Images for example can be viewed as three-dimensional arrays containing RGB values for each pixel. Sensor data can be thought of as one-dimensional arrays of sensor values versus time. Our first step in analyzing data, regardless of the format, is to transform the data into arrays of numbers. This article introduces NumPy, a vital Machine Learning tool, and how it is levered to provide efficient storage and manipulation of numerical arrays.

Python built-in lists

Data types in Python are dynamically inferred, as oppose to C-based languages which require variable data types to be explicitly declared. This is one of the attractive aspects of Python, which contributes to its flexibility and ease of use. Thanks to this dynamic typing approach, Python lists can hold variables of different types.

In [1]: numbers = [1, 'two', 3]

In [2]: [type(element) for element in numbers]

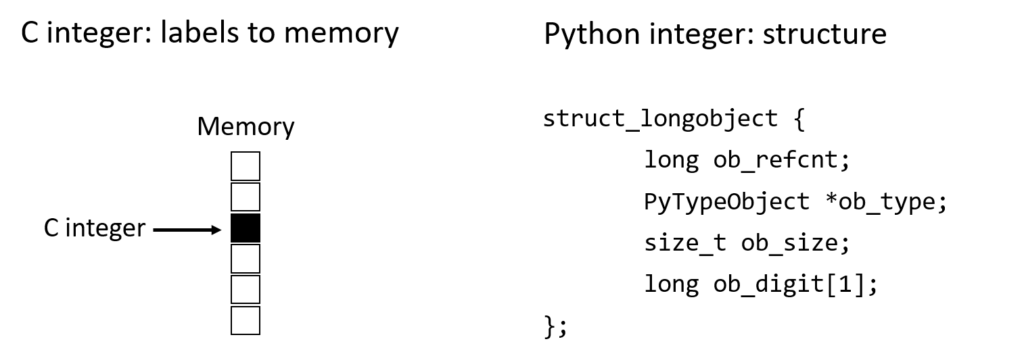

Out[2]: [int, str, int]Dynamic typing however requires that even a simple integer variable carries overhead information to support type inference during program execution. Compared to an integer in C/C++, which is simply a label pointing to an area of memory, a Python integer is a structure which carries information required to perform dynamic typing.

Carrying this additional information comes at a cost, especially when creating large arrays containing a significant number of objects. For this reason, Python’s built-in list object is not suitable for storing and manipulating large quantities of Machine Learning data.

The NumPy ndarray

The solution to the challenges described above is the NumPy ndarray object, which provides a fixed-type data buffer combined with an interface for performing efficient operations on the data. This trades the flexibility of dynamic typing for computational efficiency, making it an ideal solution for Machine Learning. There are several ways to create a NumPy array, the easiest of which is using a Python list. Note that the data type is common for the whole NumPy array, accessed via the dtype attribute.

In [3]: import numpy as np

In [4]: array = np.array([1, 2, 3, 4])

In [5]: array

Out[5]: array([1, 2, 3, 4])

In [6]: array.dtype

Out[6]: dtype('int64')The data type of the NumPy array can be explicitly set using the dtype keyword.

In [7]: np.array([1, 2.3, 4, 9.8], dtype='float32')

Out[7]: array([1. , 2.3, 4. , 9.8], dtype=float32)Often in Machine Learning, the required size of the array is known but the elements are unknown. NumPy provides functions which allow arrays to be initialized to predetermined dimensions with placeholder content.

In [8]: np.zeros((2, 3))

Out[8]:

array([[0., 0., 0.],

[0., 0., 0.]])NumPy’s reshaping functions are valuable when the shape of datasets needs to be transformed, for example changing a 1D array into a 2D array.

In [9]: np.arange(9).reshape(3, 3)

Out[9]:

array([[0, 1, 2],

[3, 4, 5],

[6, 7, 8]])In addition to dynamic typing, the other factor that slows Python down is the way operations are performed on loops. If a for-loop is used to perform a computation on each element of an array, Python inspects the element’s type then performs a dynamic lookup of the correct function to use for that type. This is unnecessary when working with arrays of uniform type. The key to achieving computational speed with NumPy’s arrays is to use vectorized operations rather than loops. NumPy’s means of achieving this is called Universal Functions, also known as UFuncs, which are statically typed, compiled routines. The idea is to put the looping into the compiled layer behind NumPy, rather than the interpreted approach taken by Python. Operations are performed on the array itself, which are then applied to each element.

In [10]: array = np.array([1, 2, 3, 4])

In [11]: np.add(array, 2)

Out[11]: array([3, 4, 5, 6])

In [12]: np.sin(array)

Out[12]: array([ 0.84147098, 0.90929743, 0.14112001, -0.7568025 ])The final aspect of NumPy to mention is Fancy Indexing. NumPy supports the regular indexing and slicing operations which are well known to Python developers. In addition, NumPy offers a means of accessing arrays using arrays of indices rather than single scalars. This enables quick access and modification of complicated array subsets. The key with Fancy Indexing is that the shape of the result reflects the shape of the index arrays rather than the shape of the array being indexed.

In [13]: array = np.arrange(9)**2

In [14]: array

Out[14]: array([ 0, 1, 4, 9, 16, 25, 36, 49, 64])

In [15]: index = np.array([[0, 3], [8, 4]])

In [16]: array[index]

Out[16]:

array([[ 0, 9],

[64, 16]])Conclusion

The driver behind NumPy is to provide efficient storage and manipulation of data in memory, overcoming the slowness of Python’s built-in containers caused by dynamic typing and looping. This article has touched on the key aspects of NumPy’s interface and it’s applicability in Machine Learning.