Deep Learning is arguably the most powerful object detection tool available. Its downside, however, is the large quantity of training images it requires and the computational horsepower needed to process them. When the objects to detect are consistent in appearance, such as a logo in a cluttered scene, Deep Learning can be overkill. This article demonstrates how to use keypoint detectors and local invariant descriptors to perform object detection in no more than 30 lines of Python.

The objective

Consider the scene below. Our objective is to detect and locate the water bottle logo in a scene of other objects.

Keypoint detection

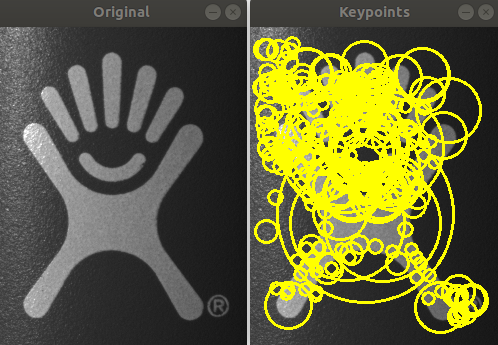

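Keypoint detectors use corners, edges and blobs to find low-level features in images. Many keypoint detection techniques are available; two of the best known are the FAST detector and the Harris detector. The following Python code uses OpenCV to identify keypoints in our water bottle logo with the Fast Hessian detector, which is the keypoint detector used by SURF (hence `cv2.xfeatures2d.SURF_create`). Note that SURF is patented and lives in the opencv-contrib `xfeatures2d` module; in recent OpenCV releases it is only available in builds compiled with the non-free algorithms enabled (the `OPENCV_ENABLE_NONFREE` CMake option).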

```python
import cv2

# load the logo and convert it to grayscale
image = cv2.imread("./logo-cropped.png")
orig = image.copy()
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# set up the Fast Hessian (SURF) detector and detect the keypoints
detector = cv2.xfeatures2d.SURF_create()
kps = detector.detect(gray, None)

# draw each keypoint as a circle; kp.size is the keypoint's diameter
for kp in kps:
    r = int(0.5 * kp.size)
    (x, y) = map(int, kp.pt)
    cv2.circle(image, (x, y), r, (0, 255, 255), 2)

# display the original and annotated images
cv2.imshow("Original", orig)
cv2.imshow("Keypoints", image)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
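The FAST and Harris detectors mentioned above are both available in the main OpenCV module (`cv2.FastFeatureDetector_create` and `cv2.cornerHarris`), which is handy if your OpenCV build lacks SURF. To show what a corner detector actually measures, here is a minimal NumPy-only sketch of the Harris corner response R = det(M) - k * trace(M)^2, where M is the structure tensor of the image gradients summed over a small window. It is illustrative, not OpenCV's implementation: the helper names `box_sum` and `harris_response`, the unweighted (box) window in place of Harris's Gaussian weighting, and the defaults `radius=2` and `k=0.04` are all assumptions.

```python
import numpy as np


def box_sum(a, radius):
    """Sum of `a` over a (2*radius+1)^2 window centred on each pixel (zero padding)."""
    size = 2 * radius + 1
    padded = np.pad(a, radius)
    # integral image with a leading row and column of zeros
    ii = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    ii[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    return ii[size:, size:] - ii[:-size, size:] - ii[size:, :-size] + ii[:-size, :-size]


def harris_response(gray, radius=2, k=0.04):
    """Harris response R = det(M) - k * trace(M)**2 at every pixel (box window assumed)."""
    img = gray.astype(np.float64)
    # np.gradient returns the derivative along rows (y) first, then columns (x)
    iy, ix = np.gradient(img)
    # structure tensor entries, summed over the local window
    sxx = box_sum(ix * ix, radius)
    syy = box_sum(iy * iy, radius)
    sxy = box_sum(ix * iy, radius)
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace
```

Corners give large positive responses, straight edges give negative responses and flat regions give zero; keypoints would then be taken as local maxima of R above some threshold. On a synthetic white square on a black background, the strongest response lands within a pixel or two of the square's corners.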

Object detection

Having identified keypoints in our logo, we can use local invariant descriptors to describe the logo and detect its presence in a cluttered scene. A local invariant descriptor extracts a feature vector for each keypoint that describes the immediate region surrounding it. In this example, we’ll use Fast Hessian to detect the keypoints and the RootSIFT descriptor to extract (describe) them, using the factory functions from the imutils package. This first section of code sets up the keypoint detector, extractor and matcher, then performs the extraction. The purpose of the matcher is to match keypoints between the logo image and the cluttered scene.

```python
import cv2
from imutils.feature.factories import FeatureDetector_create, DescriptorExtractor_create, DescriptorMatcher_create

# set up the keypoint detector (Fast Hessian), descriptor extractor (RootSIFT) and matcher
detector = FeatureDetector_create("SURF")
extractor = DescriptorExtractor_create("RootSIFT")
matcher = DescriptorMatcher_create("BruteForce")

# load the logo and the cluttered scene, and convert both to grayscale
logo = cv2.imread("./logo-cropped.png")
scene = cv2.imread("./cluttered-scene.png")
grayLogo = cv2.cvtColor(logo, cv2.COLOR_BGR2GRAY)
grayScene = cv2.cvtColor(scene, cv2.COLOR_BGR2GRAY)

# detect keypoints in both images
kpsLogo = detector.detect(grayLogo)
kpsScene = detector.detect(grayScene)

# describe the region around each keypoint
(kpsLogo, featuresLogo) = extractor.compute(grayLogo, kpsLogo)
(kpsScene, featuresScene) = extractor.compute(grayScene, kpsScene)
```
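imutils supplies RootSIFT as a ready-made extractor. As a sketch of what that transform does (not imutils' own code), the function below converts ordinary SIFT descriptors, one per row, into RootSIFT descriptors: each descriptor is L1-normalized and then square-rooted element-wise. Because each result has (very nearly) unit L2 norm, the squared Euclidean distance between two RootSIFT vectors equals 2 - 2 * sum(sqrt(x_i * y_i)), so Euclidean matching effectively compares the original descriptors with the Hellinger kernel. The name `root_sift` and the small `eps` guard against all-zero descriptors are assumptions; with OpenCV 4.4 or later, the input SIFT descriptors could come from `cv2.SIFT_create().compute(gray, kps)`.

```python
import numpy as np


def root_sift(descriptors, eps=1e-7):
    """Convert SIFT descriptors (one per row) to RootSIFT descriptors."""
    descs = np.asarray(descriptors, dtype=np.float32)
    # L1-normalize each descriptor (SIFT values are non-negative)
    descs = descs / (np.abs(descs).sum(axis=1, keepdims=True) + eps)
    # element-wise square root: Euclidean distance now mirrors the Hellinger kernel
    return np.sqrt(descs)
```

The output has the same shape as the input, so it can be passed straight to a matcher in place of the raw SIFT descriptors.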

Having detected the keypoints in both images and extracted features to describe them, we can match the keypoints in the logo with those in the cluttered scene. This is done with a k-nearest-neighbour search that returns the two closest scene descriptors for each logo descriptor. Lowe’s ratio test then uses these two matches to discard ambiguous ones: a match is kept only if the distance to the closest descriptor is less than 0.8 times the distance to the second-closest, which serves as an estimate of the distance to an incorrect match. This filters out most false positives and leaves only high-quality matches.

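```python
# find the two nearest scene descriptors for each logo descriptor
rawMatches = matcher.knnMatch(featuresLogo, featuresScene, 2)
matches = []

# keep only the matches that pass Lowe's ratio test
if rawMatches is not None:
    for match in rawMatches:
        if len(match) == 2 and match[0].distance < match[1].distance * 0.8:
            # store (scene keypoint index, logo keypoint index)
            matches.append((match[0].trainIdx, match[0].queryIdx))
```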
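To make the matching logic concrete without OpenCV's matcher objects, here is a NumPy-only sketch of brute-force two-nearest-neighbour matching followed by Lowe's ratio test. It mirrors the loop above and returns the same `(trainIdx, queryIdx)` tuples. The function name `ratio_test_matches` is hypothetical, and it assumes Euclidean (L2) distance, which is the norm OpenCV's "BruteForce" matcher uses.

```python
import numpy as np


def ratio_test_matches(query, train, ratio=0.8):
    """Brute-force 2-NN matching with Lowe's ratio test.

    Returns a list of (trainIdx, queryIdx) pairs, like the loop above.
    """
    query = np.asarray(query, dtype=np.float64)
    train = np.asarray(train, dtype=np.float64)
    if len(train) < 2:
        return []  # the ratio test needs two neighbours per query
    # pairwise Euclidean distances, shape (len(query), len(train))
    dists = np.linalg.norm(query[:, None, :] - train[None, :, :], axis=2)
    # indices of the two nearest train descriptors for each query descriptor
    nearest = np.argsort(dists, axis=1)[:, :2]
    matches = []
    for q, (best, second) in enumerate(nearest):
        # keep the match only if it is clearly closer than the runner-up
        if dists[q, best] < ratio * dists[q, second]:
            matches.append((int(best), q))
    return matches
```

A query descriptor that sits equally close to two train descriptors is rejected, because its nearest match is no closer than the runner-up. This version builds the full query-by-train distance array in memory, so it suits small descriptor sets.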

The resulting image shows that the logo has been successfully detected and located in a cluttered scene with no false positive matches.
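The matching code above finds correspondences but does not by itself compute where the logo lies in the scene. A common way to locate it is to estimate a homography from the matched keypoint coordinates and project the logo's corners into the scene; with OpenCV this is usually `cv2.findHomography(ptsLogo, ptsScene, cv2.RANSAC, 4.0)` followed by `cv2.perspectiveTransform`. The sketch below is a NumPy-only stand-in using the normalized direct linear transform (DLT). It has no RANSAC step, so it assumes the ratio test has already removed nearly all outliers. All function names here are hypothetical.

```python
import numpy as np


def matches_to_points(matches, kps_logo, kps_scene):
    """Turn (trainIdx, queryIdx) pairs into two (N, 2) coordinate arrays.

    kps_* may be lists of cv2.KeyPoint or any objects with a .pt attribute.
    """
    pts_logo = np.array([kps_logo[q].pt for (_, q) in matches], dtype=np.float64)
    pts_scene = np.array([kps_scene[t].pt for (t, _) in matches], dtype=np.float64)
    return pts_logo, pts_scene


def _normalize(pts):
    """Similarity transform to zero mean and mean distance sqrt(2) (Hartley normalization)."""
    centroid = pts.mean(axis=0)
    scale = np.sqrt(2) / np.mean(np.linalg.norm(pts - centroid, axis=1))
    T = np.array([[scale, 0, -scale * centroid[0]],
                  [0, scale, -scale * centroid[1]],
                  [0, 0, 1]])
    homog = np.column_stack([pts, np.ones(len(pts))])
    return (T @ homog.T).T, T


def estimate_homography(src, dst):
    """Least-squares homography mapping src -> dst (needs >= 4 correspondences)."""
    if len(src) < 4:
        raise ValueError("at least 4 correspondences are required")
    src_n, T_src = _normalize(src)
    dst_n, T_dst = _normalize(dst)
    rows = []
    for (x, y, _), (u, v, _) in zip(src_n, dst_n):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # solution: right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(np.array(rows))
    H_n = vt[-1].reshape(3, 3)
    # undo the normalization and fix the scale so that H[2, 2] == 1
    H = np.linalg.inv(T_dst) @ H_n @ T_src
    return H / H[2, 2]


def project_corners(H, width, height):
    """Map the logo's four corners into scene coordinates with H."""
    corners = np.array([[0, 0, 1], [width - 1, 0, 1],
                        [width - 1, height - 1, 1], [0, height - 1, 1]], dtype=np.float64)
    mapped = (H @ corners.T).T
    return mapped[:, :2] / mapped[:, 2:3]
```

With `kpsLogo`, `kpsScene`, `matches` and `logo` from the code above, one would build the point arrays with `matches_to_points(matches, kpsLogo, kpsScene)`, estimate `H`, and pass the rounded output of `project_corners(H, logo.shape[1], logo.shape[0])` to `cv2.polylines` to outline the logo in the scene.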

Conclusion

When the objects to detect vary considerably, such as pieces of fruit, Deep Learning computer vision techniques can achieve very high accuracy. For objects with minimal variation, such as logos, keypoints and local invariant descriptors provide a simple but powerful detection tool that can achieve impressive results from a single training image.