Setting up development environments for Deep Learning can be time-consuming and frustrating. Open source neural network libraries such as TensorFlow and PyTorch are notoriously difficult to get running, especially when GPUs are involved, because they require very specific versions of supporting libraries and drivers. In computer vision, the environment challenge also applies at deployment, as the hardware used to perform inference is often very different to the hardware used for training. This article reviews the use of Docker containers to make Deep Learning environments more portable and easier to use.

Virtual Machines

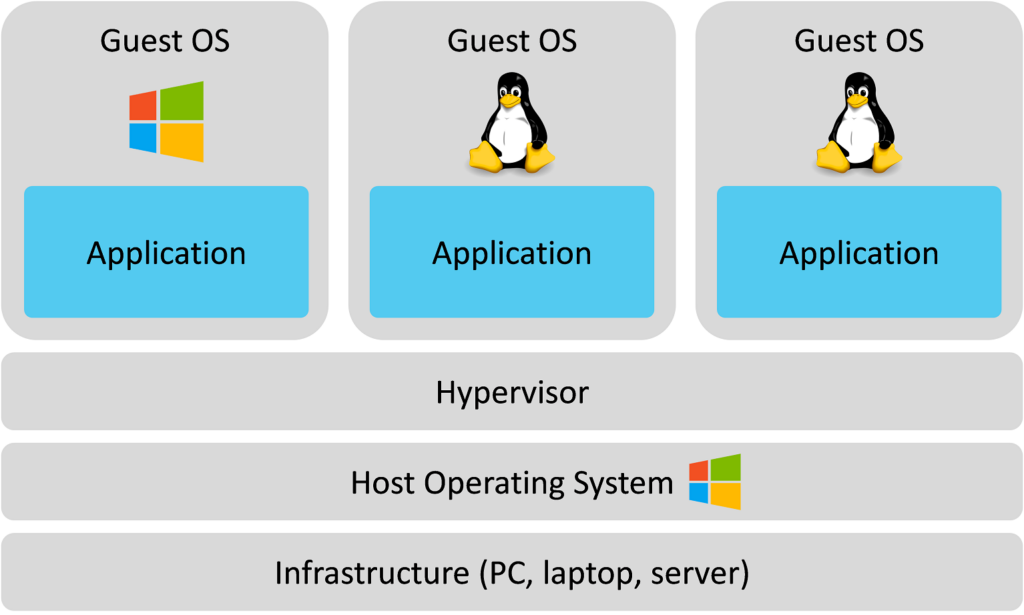

Neural network training workflows require a lot of supporting libraries. Different Deep Learning models require specific versions of tools such as TensorFlow and CUDA, often creating version conflicts on a development machine. One solution is to use virtual machines to provide isolated development environments on the same computer. Virtual machines allow entire guest operating systems to run on top of the host operating system. Different operating systems can be combined: for example, a Linux virtual machine can be run on a Windows computer, providing a self-contained Linux development environment. The virtual machine can therefore act as a development sandbox in which application development tools can be set up independently of the host operating system. Tools such as VirtualBox and VMware are used to create and manage virtual machines.
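The version conflicts mentioned above can be made concrete with a small check. The following is a minimal sketch, not part of any particular toolchain: the function name `find_version_conflicts` and the pins in `PROJECT_PINS` are hypothetical and chosen purely for illustration. It compares the exact versions a project expects against what is installed in the current Python environment, using the standard library's `importlib.metadata`.

```python
from importlib import metadata


def find_version_conflicts(required, get_installed=metadata.version):
    """Return {package: (required, installed)} for every pin that is not met.

    `installed` is None when the package is missing from the environment.
    `get_installed` can be swapped out, which keeps the function easy to test.
    """
    conflicts = {}
    for package, wanted in required.items():
        try:
            installed = get_installed(package)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != wanted:
            conflicts[package] = (wanted, installed)
    return conflicts


# Hypothetical pins for one project; the version numbers are illustrative only.
PROJECT_PINS = {"tensorflow": "2.15.0", "numpy": "1.26.4"}

if __name__ == "__main__":
    for package, (wanted, installed) in find_version_conflicts(PROJECT_PINS).items():
        print(f"{package}: need {wanted}, found {installed or 'nothing installed'}")
```

Two projects with different pins for the same package cannot both pass this check in a single shared environment, which is the situation an isolated sandbox is meant to avoid.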

The drawback of virtual machines is their size and resource requirements. As the entire guest OS is virtualized, a virtual machine can occupy tens of gigabytes, making it cumbersome to store and to transfer between developers. Additionally, a running virtual machine consumes considerable computational resources, as it requires its own CPU and memory allocation.

Docker Containers

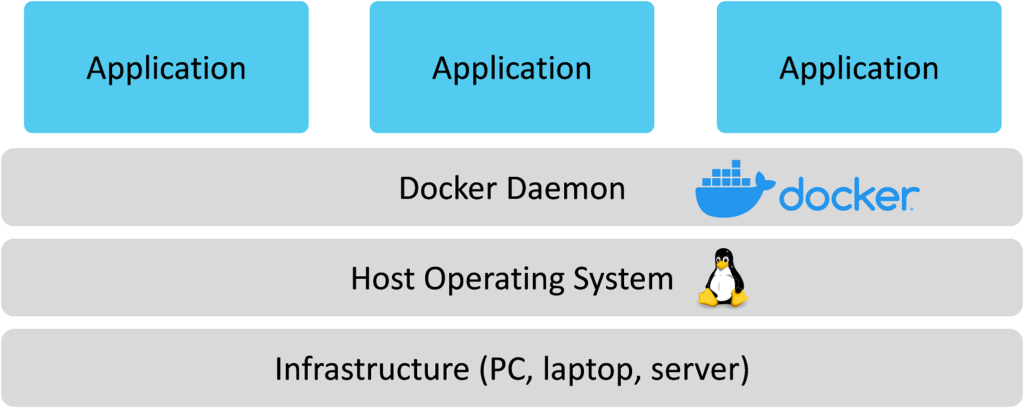

Docker containers provide many of the isolation benefits of a virtual machine but require far fewer computational resources. Rather than virtualizing an entire guest operating system for each separate environment, the Docker daemon runs containers from images, and these containers provide the required isolation between applications. Furthermore, the Docker daemon leverages the host operating system's resources for the container, rather than virtualizing separate hardware for a guest operating system. Container images can be as small as a few megabytes, and containers can start in a fraction of a second, making them very portable.

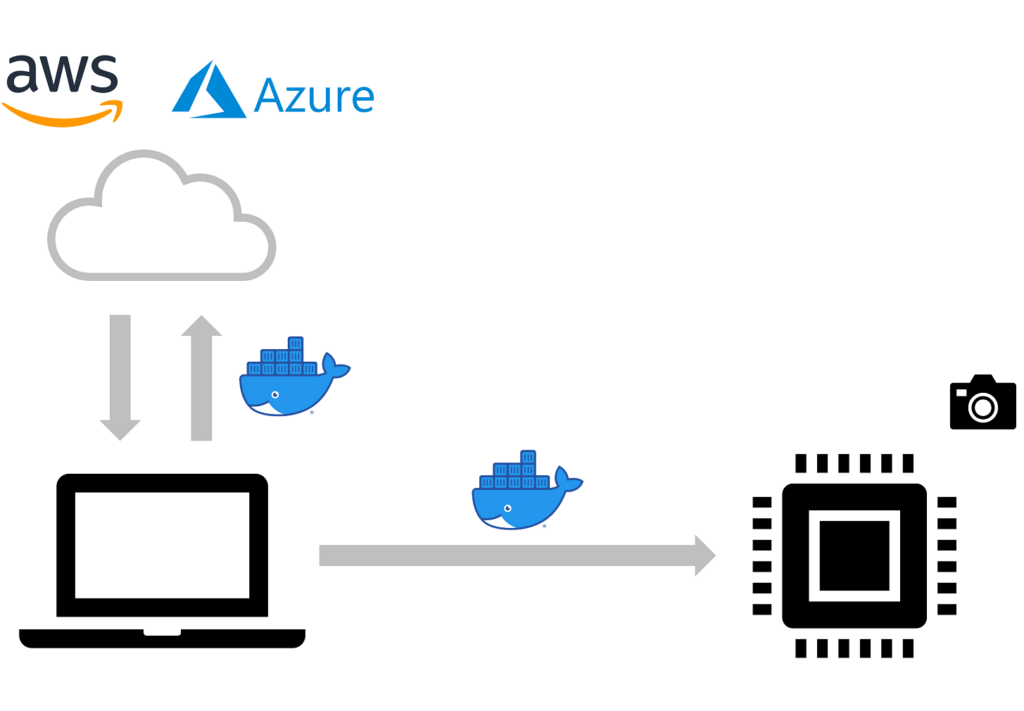

Each container can be thought of as a portable, isolated sandbox which provides all of the libraries and tools required to develop and run a given application. The sandbox can be moved between developers and deployed to application hardware without having to manually install supporting libraries and manage their versions. At Agmanic Vision we use Docker containers to speed up development environment setup and deployment. We train Deep Learning models in cloud-based containers, leveraging powerful GPUs, then deploy the same containers to edge devices.
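As a rough illustration of the train-in-the-cloud, deploy-to-the-edge workflow described above, the sketch below assembles `docker run` command lines for the same image in both settings. It is an assumption-laden example rather than a description of any specific setup: the helper `docker_run_command`, the image name `example/dl-env:1.0`, the scripts `train.py` and `infer.py`, and the host paths are all hypothetical. The flags themselves (`--rm`, `--gpus all`, `-v`, `-w`) are standard Docker CLI options; `--gpus` additionally assumes the NVIDIA Container Toolkit is installed on the host.

```python
import shlex


def docker_run_command(image, command, workdir_on_host=None, use_gpu=False):
    """Build the argument list for `docker run` without executing it."""
    args = ["docker", "run", "--rm"]  # --rm removes the container when it exits
    if use_gpu:
        # Expose all host GPUs to the container (needs NVIDIA Container Toolkit).
        args += ["--gpus", "all"]
    if workdir_on_host is not None:
        # Mount a host directory at /workspace and make it the working directory.
        args += ["-v", f"{workdir_on_host}:/workspace", "-w", "/workspace"]
    args.append(image)
    args += list(command)
    return args


# Hypothetical image, scripts, and paths, for illustration only.
IMAGE = "example/dl-env:1.0"

train = docker_run_command(IMAGE, ["python", "train.py"],
                           workdir_on_host="/home/dev/project", use_gpu=True)
infer = docker_run_command(IMAGE, ["python", "infer.py"],
                           workdir_on_host="/opt/app")

print(shlex.join(train))
print(shlex.join(infer))
```

Only the flags differ between the two commands; the image, and therefore the library versions inside it, stays the same. To actually launch a container, the returned list could be passed to `subprocess.run`.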

Conclusion

Docker containers offer a lighter-weight, easier-to-use alternative to virtual machines whilst providing most of the isolation benefits. Their portability significantly speeds up Deep Learning training and deployment workflows.