Industrial vision systems often fall into two categories: PC-based systems and smart cameras. A lesser-known approach, at least in industrial contexts, is embedded vision. This article describes what embedded vision is and how it compares with PC-based vision and smart cameras. Before diving into embedded vision systems, let’s first review the differences between PC-based vision and smart cameras.

PC-based Vision

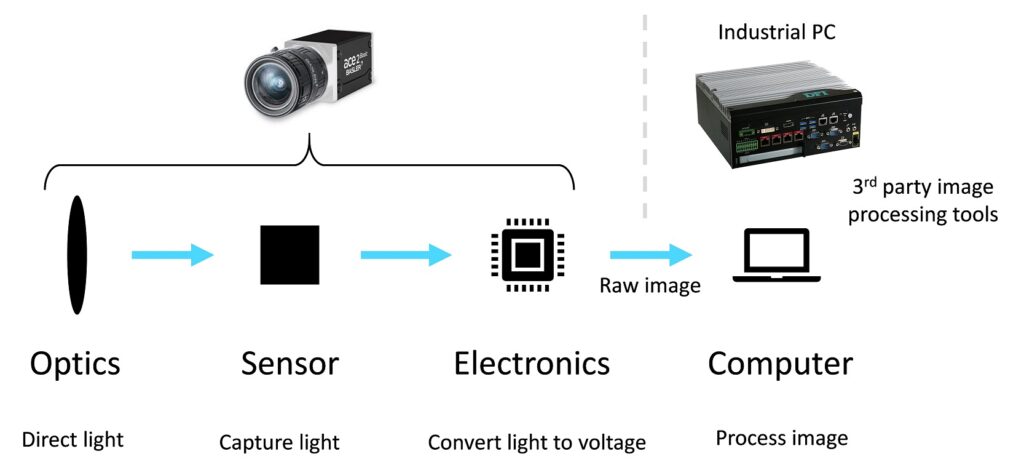

With a PC-based vision system, image acquisition is performed by what is often known as a “dumb camera”. The term reflects the fact that very little processing is performed onboard the camera. In addition to the lens, a dumb camera contains an imaging sensor, which captures light, and limited electronic circuitry to convert the light energy into voltage levels. The raw image data is then transferred from the dumb camera to a PC via a protocol such as GigE Vision or USB3. The PC is typically an industrial version of a desktop PC, often running Windows. It is then the vision system integrator’s responsibility to build an application which runs on the PC, using third-party image processing libraries and software development tools. The development environment remains very close to that of mainstream software development: programming is done in high-level languages such as C# and F#, using well-known tools such as Microsoft Visual Studio.
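To make the division of labour concrete, the following is a minimal sketch of the kind of processing step that runs on the PC once a raw frame has arrived. It is written in C++ without any library so that it stays self-contained; a real application would normally rely on a third-party image processing library instead. The `Frame` struct and the `countBrightPixels` and `partPresent` functions are hypothetical names introduced for illustration only, and the frame is assumed to be 8-bit grayscale.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container for one raw 8-bit grayscale frame as it might be
// handed over by a camera driver. Real camera SDKs define their own types.
struct Frame {
    std::size_t width;
    std::size_t height;
    std::vector<std::uint8_t> pixels;  // row-major, width * height bytes
};

// Count the pixels that are strictly brighter than `threshold`.
std::size_t countBrightPixels(const Frame& frame, std::uint8_t threshold) {
    std::size_t count = 0;
    for (std::uint8_t p : frame.pixels) {
        if (p > threshold) {
            ++count;
        }
    }
    return count;
}

// Crude presence check: report true when at least `minFraction` of the
// frame is brighter than `threshold`. An empty frame is never "present".
bool partPresent(const Frame& frame, std::uint8_t threshold, double minFraction) {
    if (frame.pixels.empty()) {
        return false;
    }
    const double fraction =
        static_cast<double>(countBrightPixels(frame, threshold)) /
        static_cast<double>(frame.pixels.size());
    return fraction >= minFraction;
}
```

The camera contributes only the pixel buffer; every decision about what the image means is taken in application code on the host.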

Smart Cameras

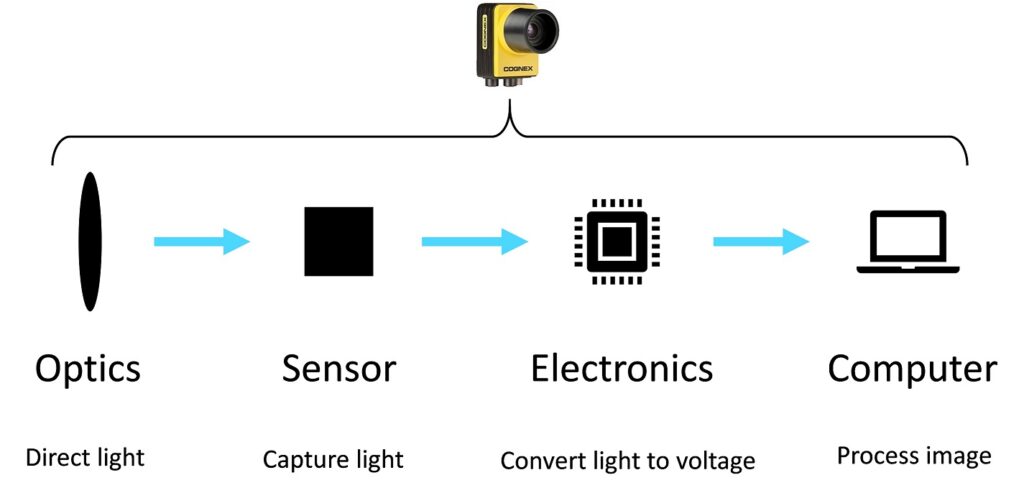

Smart cameras integrate the PC’s workload into one self-contained camera unit which can operate independently. Some smart cameras even have integrated lighting and a small number of input/output terminals for working with peripheral devices. Smart cameras are therefore highly convenient, as very little integration is required. However, they are costly and almost impossible to customize if additional functionality is needed. Smart cameras are configured using a pre-defined set of tools, rather than programmed using a programming language. This configuration is usually performed via a graphical interface provided by the smart camera vendor.

Embedded Vision

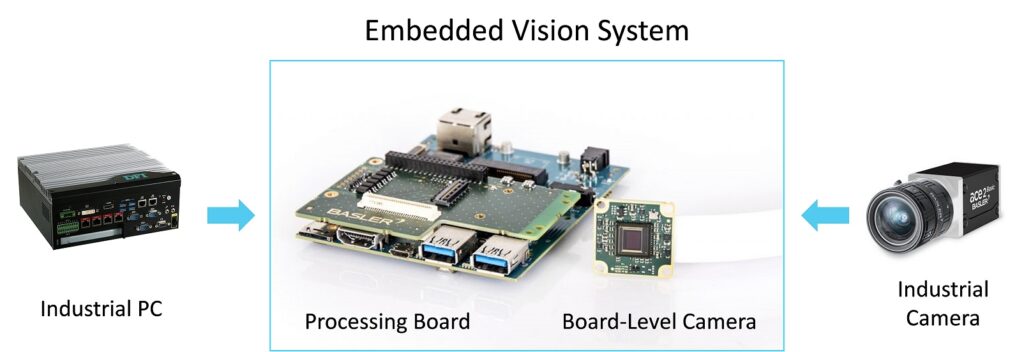

Now let’s look at embedded vision. The term embedded comes from the embedded systems domain, which refers to electronics and software integrated directly into a product, as opposed to running on a separate desktop PC or a server. Examples of embedded systems include car engine controllers, avionics and smart home devices. In the context of a vision system, a small processing board replaces the PC, and a bare-bones camera known as a board-level camera replaces the dumb camera. As illustrated above, these are lower-level components which require integrating and packaging into a product. For example, an embedded processing board and a board-level camera could be directly integrated into the housing of a robot, rather than having a separately mounted PC and camera.

The main advantages of an embedded vision system are hardware cost savings, space savings and power savings. Embedded vision hardware costs as little as $200 to $300 per instance, as opposed to several thousand dollars for a full-blown vision PC and camera. There are, however, a number of challenges and points to consider before adopting embedded vision technology.

The heart of an embedded processing board is a System on Module (SoM). This is an electronic board which contains the key elements found in a PC (processor, memory, storage etc.) but more compactly packaged. A SoM fits into a carrier board which provides external connections and interfacing electronics. Sometimes the SoM and carrier board come as a single unit, known as a Single Board Computer; the Raspberry Pi is a well-known example. An example of an industrial-grade SoM which is suitable for embedded vision is Toradex’s Verdin iMX8M Plus, which provides seamless integration with embedded camera modules.

Most SoMs use ARM CPUs rather than the traditional x86 processors used in PCs. For this reason, embedded systems often use Linux as the operating system, in part for its small footprint and configurability, but also because until recently Windows provided only limited support for ARM processors.

Embedded Linux development is more specialized and complicated than mainstream PC-based software development. Embedded programming is often performed in C/C++ with tools such as Eclipse and Vim. Due to the resource-constrained nature of embedded systems, development is typically done on a separate laptop using cross-compilation: the code is built on the laptop for the board's processor and then transferred to the board to run. This results in a more complex toolchain for programming and debugging. As a result, more specialized skills and tools are required to implement an embedded vision system.
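One way to see what cross-compilation changes is to have a program report the architecture it was built for. The sketch below uses preprocessor macros that GCC and Clang predefine for the target (with the MSVC equivalents alongside); the function name `targetArchitecture` is a hypothetical helper, not part of any SDK. The value is fixed at compile time, so it reflects the target chosen by the compiler rather than the machine the compiler ran on.

```cpp
#include <string>

// Return a label for the CPU architecture this code was compiled for.
// The macros are predefined by the compiler for its *target*, so a
// cross-compiler running on an x86 laptop still selects the ARM branch.
std::string targetArchitecture() {
#if defined(__aarch64__) || defined(_M_ARM64)
    return "arm64";    // 64-bit ARM, common on recent SoMs
#elif defined(__arm__) || defined(_M_ARM)
    return "arm32";    // 32-bit ARM
#elif defined(__x86_64__) || defined(_M_X64)
    return "x86_64";   // typical desktop or industrial PC
#elif defined(__i386__) || defined(_M_IX86)
    return "x86";      // 32-bit x86
#else
    return "unknown";  // architecture not covered by this sketch
#endif
}
```

Built with the laptop's native compiler, a program calling this function would typically report `x86_64`. Built with an ARM cross-compiler (for example `aarch64-linux-gnu-g++` on many Linux distributions, although the exact toolchain depends on the board vendor's SDK), the same source reports `arm64`, and the resulting binary runs only on the board, not on the laptop that produced it.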

Conclusion

The cost, space and power savings of embedded vision are significant. These must be carefully weighed against the convenience of PC-based development, which offers a broader set of easier-to-use tools. This situation is, however, gradually changing as mainstream software development tools such as .NET and Windows IoT start to make their way into the embedded systems world.